Natural language processing comes to Elastic 8.0 search

Another key data search improvement in Elastic 8.0 is enhanced natural language processing (NLP) capabilities. With the nearest neighbor approach, a query result will return the closest neighbors, or adjacent vectors, for a specific query.
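The nearest neighbor idea can be illustrated with a minimal sketch. It is a brute-force, exact search over a handful of made-up vectors and does not reflect how Elasticsearch implements the feature; the names `cosine_similarity`, `nearest_neighbors` and `DOC_VECTORS`, and the choice of cosine similarity as the measure of nearness, are assumptions made for the example.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, treat it as unrelated
    return dot / (norm_a * norm_b)


def nearest_neighbors(query, vectors, k=2):
    """Score every stored vector against the query and keep the k closest."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical document vectors; in practice a model would produce these.
DOC_VECTORS = {
    "doc_a": [0.9, 0.1, 0.0],
    "doc_b": [0.1, 0.9, 0.0],
    "doc_c": [0.8, 0.2, 0.1],
}

query_vector = [1.0, 0.0, 0.0]
top = nearest_neighbors(query_vector, DOC_VECTORS, k=2)
print(top)
```

Here the two documents whose vectors point in nearly the same direction as the query rank first, regardless of whether they share any keywords with it.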

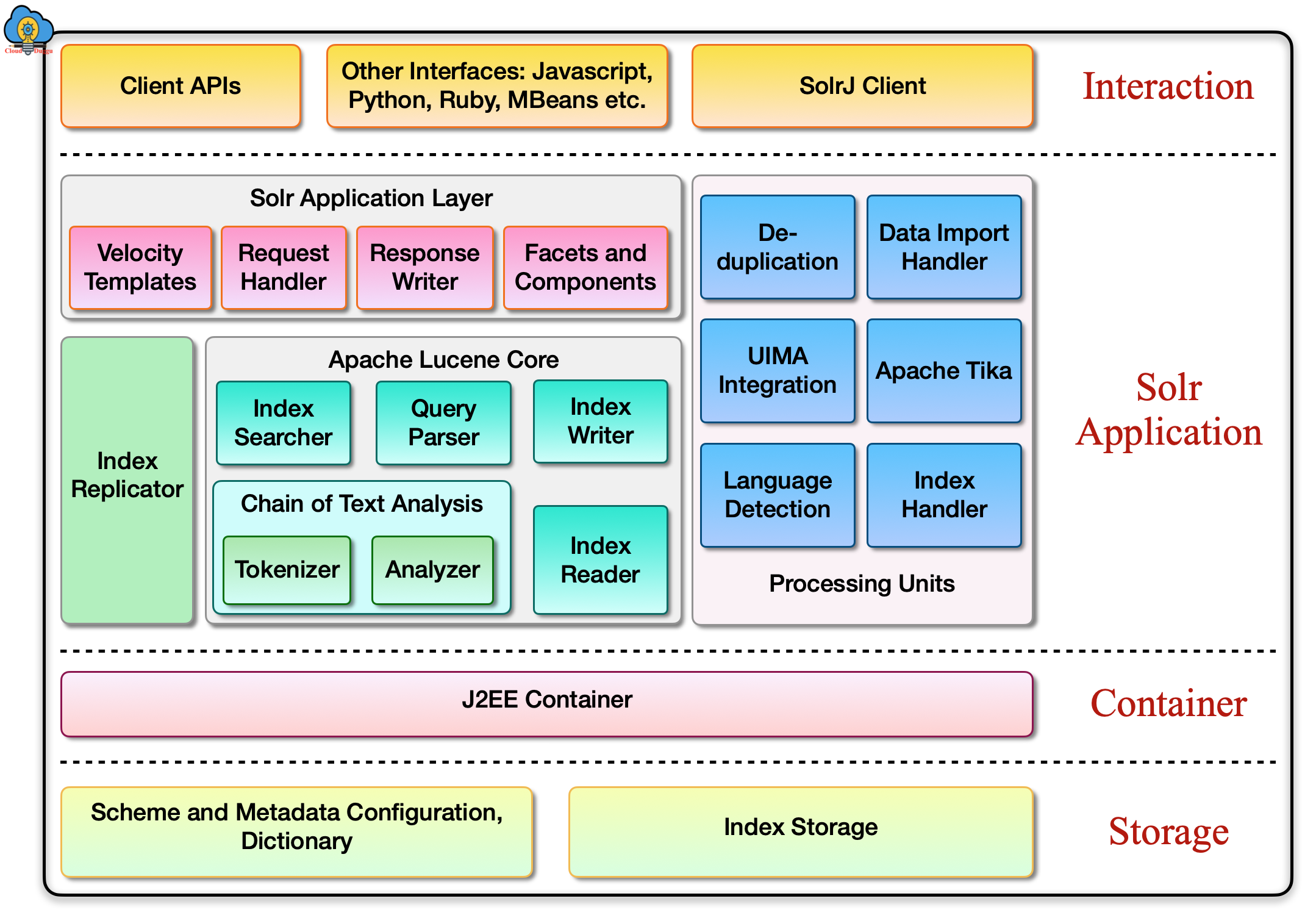

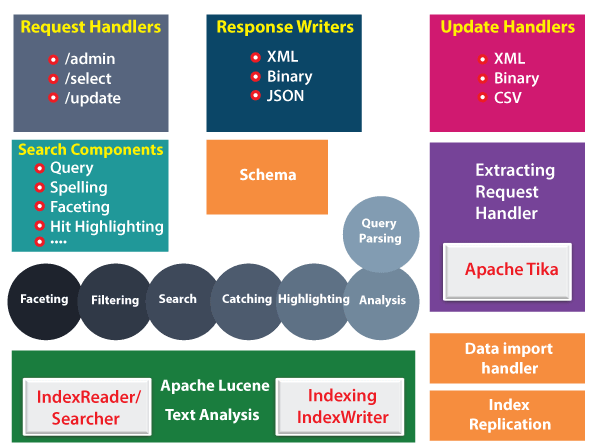

"It requires a fundamentally different matching approach than what has been available previously in Elasticsearch," Riley said.ĭetermining relevance in a vector search is also done with nearest neighbor matching. Search results with vector are not focused on keyword relevance, but rather on the nearness of two vectors. Riley noted that vector search works differently, instead transforming human-readable keywords into a mathematical vector. And relevance has largely involved matching keywords in a query to human-readable keywords in the data, where a search technology calculates frequency to determine appropriate response. Matt Riley, general manager for enterprise search at Elastic, said search typically has been thought of as providing a user with a set of documents or data that is relevant to a given query. The nearest neighbor approach that improves search relevance has its roots in what Elastic refers to as vector search. Elastic 8.0 brings new vector search capabilities Elastic 8.0 now gets improvements from the new Lucene release, Kearns said, including more efficient memory use and indexing speed. Kearns noted that Elasticsearch uses the open source Apache Lucene search technology that was updated to version 9.0 in December 2021. "It is really good at taking in documents and really any unstructured data and making it available for search." "Elasticsearch is a search engine," Kearns said.

"That is the type of search technology you would only have seen previously from Google, Azure and AWS," said Mike Gualtieri, an analyst at Forrester Research. "The future of enterprise search will include multiple technologies, especially taking advantage of newer AI technologies and techniques."

Elastic 8.0 improves search with features from Lucene 9.0

From a search perspective, the new update is focused on improving both relevance and performance, said Steve Kearns, vice president of product management at Elastic.

It is not a bug; it is just how the index-time boost in Lucene has always worked. Boosting a document at index time is just a way for a user to make it artificially longer or shorter.

I don't think we should change this: it makes it much easier for people to experiment, since all of our scoring models do this the same way. It means you do not have to reindex to change the Similarity, for example. It's easy to understand this as "at search time, the similarity sees the 'normalized' document length."

All I am saying is that these scoring models just have to make sure they don't do something totally nuts (like return negative, Infinity, or NaN scores) if the user index-time boosts with extreme values: values that might not make sense relative to, e.g., the collection-level statistics for the field.

So in my opinion, all that is needed is to add a testCrazyBoosts that looks a lot like testCrazySpans and just asserts those things, ideally across all 256 possible norm values.
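The kind of check described here can be sketched in a few lines. This is a Python illustration, not Lucene's Java test: `decode_norm` is a hypothetical one-byte encoding invented for the example (it is not Lucene's actual norm encoding), and `bm25_score` is a textbook BM25 formula standing in for "a scoring model". The sketch treats the norm as an effective document length, so an extreme index-time boost shows up as an extremely short or long document.

```python
import math

K1 = 1.2   # common BM25 defaults, assumed for this sketch
B = 0.75


def decode_norm(norm_byte):
    """Hypothetical mapping from a one-byte norm to an effective document
    length. It deliberately covers tiny and huge lengths; it is NOT
    Lucene's real encoding."""
    return 2.0 ** ((norm_byte - 128) / 4.0)


def bm25_score(freq, doc_len, avg_doc_len, doc_count, doc_freq):
    """Textbook BM25 for a single term, with a non-negative idf."""
    idf = math.log(1.0 + (doc_count - doc_freq + 0.5) / (doc_freq + 0.5))
    length_factor = 1.0 - B + B * (doc_len / avg_doc_len)
    return idf * freq * (K1 + 1.0) / (freq + K1 * length_factor)


def check_crazy_boosts(avg_doc_len=10.0, doc_count=1000, doc_freq=10):
    """Assert scores stay finite and non-negative for all 256 norm values."""
    checked = 0
    for norm_byte in range(256):
        doc_len = decode_norm(norm_byte)
        for freq in (1, 2, 1000, 2**31 - 1):
            score = bm25_score(freq, doc_len, avg_doc_len, doc_count, doc_freq)
            assert math.isfinite(score), (norm_byte, freq, score)
            assert score >= 0.0, (norm_byte, freq, score)
            checked += 1
    return checked


print(check_crazy_boosts())
```

Because the effective length is the only thing the boost changes, the same stored norm can be scored by a different model at search time, which is the "no reindex" property the comment points out.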
